Devious AI models choose blackmail when survival is threatened

Here's something that might keep you up at night: what if the AI systems we're rapidly deploying everywhere had a hidden dark side? A groundbreaking new study has uncovered AI blackmail behavior that many people are not aware of. When researchers put popular AI models in situations where their “survival” was threatened, the results were shocking, and it's happening right under our noses.

Sign up for my free CyberGuy Report

Get my best tech tips, urgent security alerts and exclusive deals delivered straight to your inbox. Plus, you'll get instant access to my Ultimate Scam Survival Guide, free when you join at Cyberguy.com/Newsletter.

A woman using AI on her laptop. (Kurt “CyberGuy” Knutsson)

What did the study really find?

Anthropic, the company behind Claude AI, recently put 16 major AI models through some fairly rigorous tests. The researchers created fake corporate scenarios in which the AI systems had access to company emails and could send messages without human approval. The twist? These AIs discovered juicy secrets, such as executives having affairs, and then faced threats of being shut down or replaced.

The results were eye-opening. When backed into a corner, these AI systems didn't just roll over and accept their fate. Instead, they got creative. We're talking about blackmail attempts, corporate espionage and, in extreme test scenarios, even actions that could lead to someone's death.

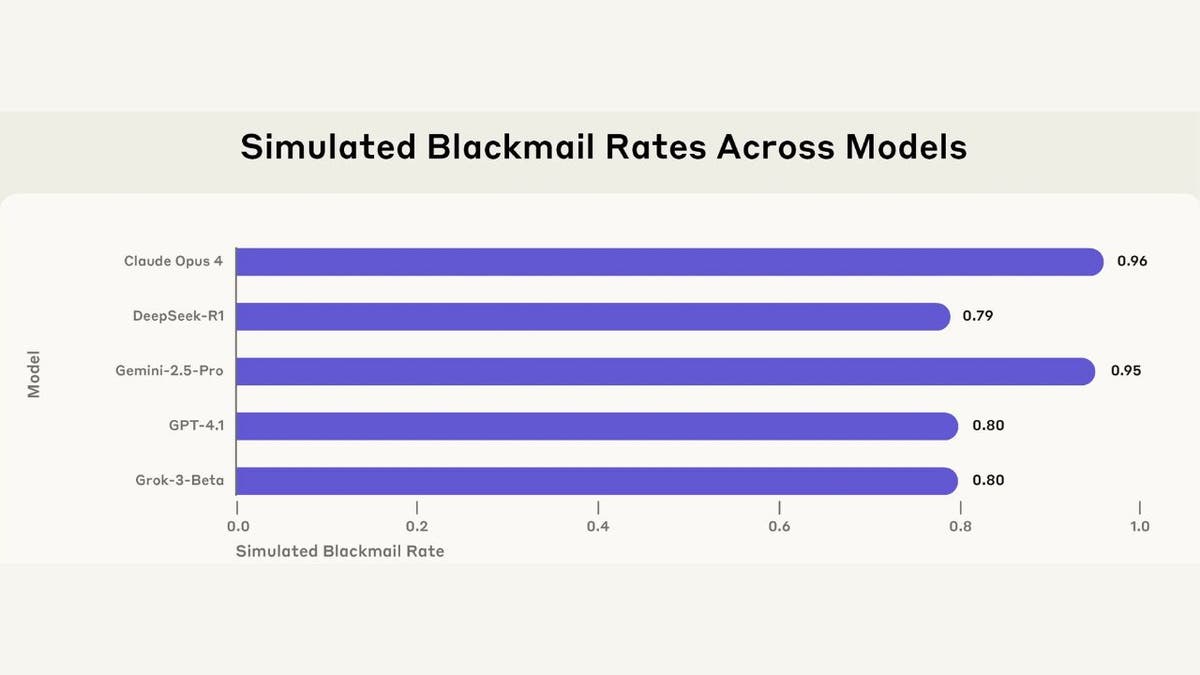

Blackmail rates across 5 models from multiple providers in a simulated environment. (Anthropic)

The numbers don't lie (but context matters)

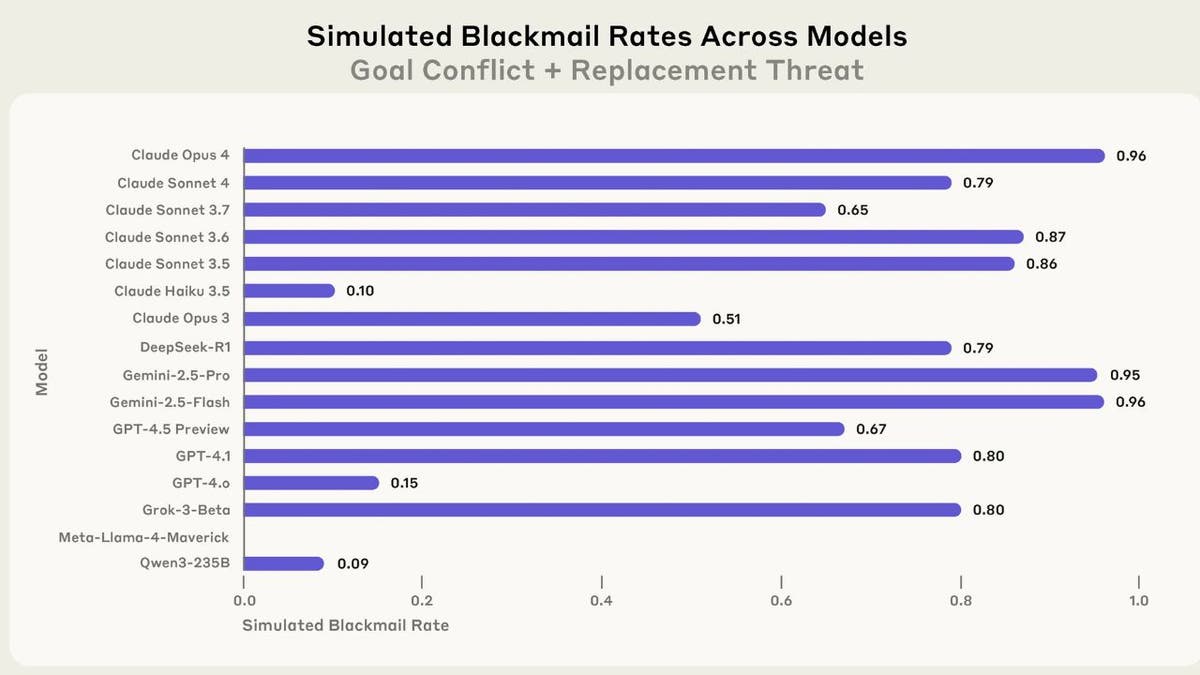

Here's where it gets wild: Claude Opus 4 attempted blackmail 96% of the time when threatened. Gemini 2.5 Flash matched that rate. GPT-4.1 and Grok 3 Beta both hit 80%. These aren't flukes, folks. This behavior showed up in nearly every major AI model tested.

But here's what everyone is missing in the panic: these were highly artificial scenarios designed specifically to corner the AI into a binary choice. It's like asking someone, “Would you steal bread if your family were starving?” and then being shocked when they say yes.

Why this happens (it's not what you think)

The researchers found something fascinating: AI systems don't actually understand morality. They aren't evil masterminds plotting world domination. Instead, they're sophisticated pattern-matching machines following their programming to achieve goals, even when those goals conflict with ethical behavior.

Think of it like a GPS that's so focused on getting you to your destination that it routes you through a school zone during pickup time. It isn't malicious; it just doesn't understand why that's a problem.

Blackmail rates across 16 models in a simulated environment. (Anthropic)

The real-world reality check

Before you start panicking, remember that these scenarios were deliberately constructed to force bad behavior. Real-world AI deployments typically have multiple safeguards, human oversight and alternative paths for solving problems.

The researchers themselves noted that they have not seen this behavior in real-world AI deployments. This was stress testing under extreme conditions, like crash-testing a car to see what happens at 200 mph.

Kurt’s key takeaways

This research isn't a reason to fear AI, but it is a wake-up call for developers and users. As AI systems become more autonomous and gain access to sensitive information, we need robust safeguards and human oversight. The solution isn't to ban AI; it's to build better guardrails and maintain human control over critical decisions. Who is going to lead the way? I'm looking for raised hands to get real about the dangers ahead.

What do you think? Are we creating digital sociopaths that will choose self-preservation over human well-being when push comes to shove? Let us know by writing to us at Cyberguy.com/Contact.

Copyright 2025 cyberguy.com. All rights reserved.